Turn your server into a family game streaming hub

If you've read my "My hardware" post, you've seen that I had a gaming PC at some point. I cannibalized its graphics card for my server so it could run speech-to-text models for my Home Assistant, which means no more gaming for me.

Or does it?

VM gaming

For a while, before I ran the STT model on it, I created a gaming VM, passed through the graphics card and gamed on it. It worked fine!

The server was under my desk, so I could just connect the output of the GPU to my screen, plug in (and pass through) my keyboard, mouse and headphones and off I went.

Still, not everything is great with VM gaming:

- it's a hassle to turn on the VM: I had to SSH into the server to start it

- the GPU can only be used by the VM, so you can't share it with other processes on the server

- you have to be smart about disk space management: you either go with thinly provisioned storage (speed penalty), or possibly over-provision

- you can't practically share a gaming VM between multiple people

So while it worked ok for my use case, I yearned for more.

That's when I discovered container gaming.

Container gaming

While trying to set up a headless LXC container with Incus (see my post about setting up Incus with SSO), I came across Games on Whales.

Their tagline is "Stream multiple virtual desktops and games running in Docker!", and that is exactly what it does.

On your server, you run their container called wolf which itself runs a Sunshine-like application.

You then pair it with your Moonlight client running on your laptop or TV and once that is done, you can start up various applications or desktops.

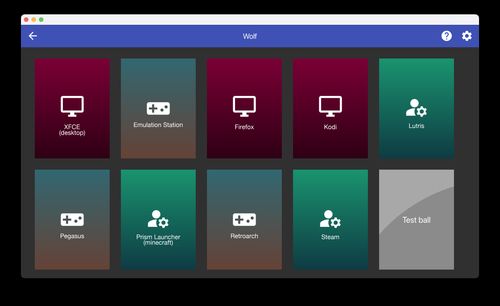

They have a good default selection:

You can add your own applications/containers later if you wish, I have yet to do so.

Moonlight passes through your input devices (keyboard+mouse/gamepad) and streams back the application GUI.

Sunshine + Moonlight

If you haven't heard of either of them, they are the two halves of a desktop streaming setup, not that different from VNC or RDP, but made for low-latency, high-quality remote gaming or desktop streaming.

Sunshine is the 'server' part, which you install on the PC you want to stream from, while the Moonlight client is installed on the laptop or TV you want to stream to.

How it works under the hood

I won't go into too much detail, but the gist of it is that it relies heavily on containers.

So the 'main' container (wolf) acts as a Sunshine server, allowing Moonlight clients to pair with it.

It's not a real Sunshine server though, it just talks the talk.

Currently you pair it by opening a URL that wolf prints in its container logs (something like https://your.host/pin/#123D3240715B5C74), which leads to a form where you enter the PIN that the Moonlight client gave you.
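If you'd rather not scan the logs by eye, a small shell function can pull the pairing URL out for you. This is only a minimal sketch: extract_pin_url is a hypothetical helper name, and the pattern is an assumption based purely on the URL shape shown above (a /pin/# path followed by hex digits), so adjust it if your logs look different.

```shell
# Hypothetical helper: read log text on stdin and print any pairing URLs.
# Assumes the URL has the shape shown in this post: .../pin/#<hex digits>.
extract_pin_url() {
  grep -oE 'https?://[^[:space:]]+/pin/#[0-9A-Fa-f]+'
}

# Usage (the container name "wolf" comes from the module in this post):
#   podman logs wolf 2>&1 | extract_pin_url
```

Reading from stdin keeps the function independent of the container backend, so the same filter should work with docker logs as well.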

Once paired, you can view the applications in Moonlight.

When starting an application, wolf starts a container related to that application, mounts your data and your graphics card and starts the stream.

For example if you selected the Steam app, wolf will:

- start the SteamApp_1234567890 container, where the number is the internal ID of your user

- create a folder 1234567890 (on your server) where it will store all your user app data

- mount a sub-folder into the container, e.g. 1234567890/Steam will be mounted into the container at ~/

- mount your graphics card so that it will be used by Steam to render games

- set up audio (via I think another separate container?)

- map through your input devices

- probably more stuff that I'm not aware of (yet)

The result is amazing. It works great, the latency is very low, I was getting 60 FPS of crisp images and the sound was of high quality.

To give you some concrete numbers:

- On my laptop over 5 GHz Wi-Fi: steady 60 FPS, 6 ms network latency, 4 ms decoding latency

- On my NVIDIA Shield TV over gigabit cable: 1920x1080 at steady 60 FPS, 1 ms network latency, 1.5 ms decoding latency

Not all is roses though.

Security Considerations

One critical caveat: For wolf to spawn new containers, you must give it access to the Docker/Podman socket.

This essentially gives wolf root-level control over your entire container infrastructure, meaning it can start, stop, and access any container on your system.

How significant this risk is depends on your setup. I run 95% of my services on bare metal, so not in containers, and the other 5% are stateless services (Gotenberg and Apache Tika) and TubeSync. So nothing really private.

You have been warned!

Setting it all up

For this part I'm just going to share my NixOS module as it is. It contains some of my specifics, but you'll get the gist of it and you'll be able to pick and choose the parts that are of interest to you.

I followed the "Nvidia (Manual)" method from their guide since I have an NVIDIA GeForce GTX 1070 GPU.

Here is the module:

{
  config,
  lib,
  pkgs,
  ...
}:
with lib;
let
  # This config itself
  cfg = config.my.services.wolf;

  # Name the container
  containerName = "wolf";

  # A reference of the ports that are used by wolf
  ports = {
    https = 47984;
    http = 47989;
    control = 47999;
    rtsp = 48010;
    video = 48100;
    audio = 48200;
  };

  # A shortcut
  backend = config.virtualisation.oci-containers.backend;

  # A typo-proof reference to the volume name
  nvidiaVolumeName = "nvidia-driver-vol";
in
{
  options.my.services.wolf = with lib.types; {
    enable = mkEnableOption "wolf";

    # Where we'll persist our user data and config
    dataDir = mkOption {
      type = path;
      default = "/var/my/wolf";
    };

    # On which address to listen on
    httpAddress = mkOption {
      type = str;
      default = "127.0.0.1";
    };

    httpPort = mkOption {
      type = int;
      default = ports.http;
    };

    # On which domain it will be accessible
    domain = mkOption {
      type = str;
      default = "wolf.${my.domain}"; # Resolves to something like `wolf.example.com`
    };
  };

  config = mkIf cfg.enable {
    # Enable NVIDIA Container Toolkit if GPU support is requested
    hardware.nvidia-container-toolkit.enable = true;

    # Needed to get working virtual input devices
    boot.kernelModules = [
      "uinput"
    ];

    # The `virtualisation.oci-containers.containers."${containerName}"` option below creates this systemd service
    # We add a preStart script to it, to set up the nvidia volume and do some cleanup
    systemd.services."${backend}-${containerName}" = {
      preStart = ''
        # Set up the nvidia volume
        ${pkgs.curl}/bin/curl https://raw.githubusercontent.com/games-on-whales/gow/master/images/nvidia-driver/Dockerfile | ${backend} build -t gow/nvidia-driver:latest -f - --build-arg NV_VERSION=$(cat /sys/module/nvidia/version) .
        ${backend} create --rm --mount source=${nvidiaVolumeName},destination=/usr/nvidia gow/nvidia-driver:latest sh

        # Stop/remove games-on-whales containers if they are running
        # (list "<id> <image>" pairs so we can filter on the image name)
        ids=$(${backend} ps --all --format '{{.ID}} {{.Image}}' | grep 'games-on-whales' | ${pkgs.busybox}/bin/awk '{print $1}')
        if [ -n "$ids" ]; then
          ${backend} stop $ids || true
          ${backend} rm $ids || true
        fi
      '';
    };

    # Define the main `wolf` container
    virtualisation.oci-containers.containers."${containerName}" = {
      autoStart = true;
      image = "ghcr.io/games-on-whales/wolf:stable";
      volumes = [
        "${cfg.dataDir}:/etc/wolf:rw"
        "/run/${backend}/${backend}.sock:/var/run/docker.sock:rw"
        "/dev/:/dev/:rw"
        "/run/udev:/run/udev:rw"
        "${nvidiaVolumeName}:/usr/nvidia:rw"
      ];
      devices = [
        "/dev/dri"
        "/dev/uinput"
        "/dev/uhid"
        "/dev/nvidia-uvm"
        "/dev/nvidia-uvm-tools"
        "/dev/nvidia-caps/nvidia-cap1"
        "/dev/nvidia-caps/nvidia-cap2"
        "/dev/nvidiactl"
        "/dev/nvidia0"
        "/dev/nvidia-modeset"
      ];
      ports = [
        "${toString ports.https}:47984/tcp"
        "${toString cfg.httpPort}:47989/tcp"
        "${toString ports.control}:47999/udp"
        "${toString ports.rtsp}:48010/tcp"
        "${toString ports.video}:48100/udp"
        "${toString ports.audio}:48200/udp"
      ];
      extraOptions = [
        "--pull=newer"
        "--device-cgroup-rule"
        "c 13:* rmw"
      ];
      environment = {
        NVIDIA_DRIVER_VOLUME_NAME = nvidiaVolumeName;
      };
    };

    # Open up the ports on the VM
    networking.firewall = {
      allowedTCPPorts = [
        ports.https
        cfg.httpPort
        ports.rtsp
      ];
      allowedUDPPorts = [
        ports.control
        ports.video
        ports.audio
      ];
    };

    services.udev.extraRules = ''
      # Allows Wolf to access /dev/uinput
      KERNEL=="uinput", SUBSYSTEM=="misc", MODE="0660", GROUP="input", OPTIONS+="static_node=uinput"

      # Allows Wolf to access /dev/uhid
      KERNEL=="uhid", TAG+="uaccess"

      # Move virtual keyboard and mouse into a different seat
      SUBSYSTEMS=="input", ATTRS{id/vendor}=="ab00", MODE="0660", GROUP="input", ENV{ID_SEAT}="seat9"

      # Joypads
      SUBSYSTEMS=="input", ATTRS{name}=="Wolf X-Box One (virtual) pad", MODE="0660", GROUP="input"
      SUBSYSTEMS=="input", ATTRS{name}=="Wolf PS5 (virtual) pad", MODE="0660", GROUP="input"
      SUBSYSTEMS=="input", ATTRS{name}=="Wolf gamepad (virtual) motion sensors", MODE="0660", GROUP="input"
      SUBSYSTEMS=="input", ATTRS{name}=="Wolf Nintendo (virtual) pad", MODE="0660", GROUP="input"
    '';

    systemd.tmpfiles.rules = [
      # Create the data dir if it does not exist
      "d ${cfg.dataDir} 750 root root"
      # Create a small file that indicates to borgmatic that this folder should not be backed up
      "f ${cfg.dataDir}/${config.my.services.borgmatic.noBackupFilename} 755 root root"
    ];

    # I use an ephemeral root partition, so we indicate that this data is to be persisted across reboots
    # This maps to some Impermanence option
    my.persisted.directories = [
      cfg.dataDir
    ];

    # Make the web part accessible
    my.services = {
      proxy.reverseProxies = [
        {
          from = cfg.domain;
          to = "${cfg.httpAddress}:${toString cfg.httpPort}";
        }
      ];

      # Only allow accessing this internally, from LAN
      authelia = {
        accessRules = [
          {
            domain = cfg.domain;
            whenInternal = true;
            policy = "two_factor";
          }
        ];
      };
    };
  };
}

I'm using the podman backend on my server, but it should work the same for docker.

You can restart the container (and all of its associated containers) with systemctl restart podman-wolf (or systemctl restart docker-wolf if you're using the docker backend).

A few tips and tricks

Sharing user folders between multiple clients

wolf treats each paired client as a separate user by default, which means each client gets its own home folder in the containers.

If you have multiple devices, it is possible to share the home folder by editing the config file by hand a bit.

Pair the second client to get the entries and then open up the config file at /var/my/wolf/cfg/config.toml.

Find the [[paired_clients]] entries and make sure that both of your clients have the same app_state_folder ID by using the ID of your first client (that likely already has some data in that folder).

I restarted the service for good measure.
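To double-check the edit before restarting, you can count how many paired clients point at each state folder. The following is a minimal sketch rather than anything wolf ships: state_folders is a hypothetical helper name, and it assumes each app_state_folder entry sits on its own line as a plain key = value pair, which is how the config file is described above.

```shell
# Hypothetical helper: print "<count> <id>" for every distinct
# app_state_folder value found in the given wolf config file.
# Assumes one `app_state_folder = "<id>"` entry per line.
state_folders() {
  sed -nE "s/^[[:space:]]*app_state_folder[[:space:]]*=[[:space:]]*[\"']?([^\"'[:space:]]+).*/\1/p" "$1" \
    | sort | uniq -c | awk '{print $1, $2}'
}

# Usage (path taken from this post):
#   state_folders /var/my/wolf/cfg/config.toml
```

If both of your clients share a folder, you should see a single line with a count of 2. Two lines with a count of 1 each mean the clients still have separate home folders.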

Mounting additional folders into the containers

If you have some ROMs (or other files) on your server that you want to make available to your RetroArch container (and others), you can do that by creating a volume mapping.

Edit the /var/my/wolf/cfg/config.toml file.

Find the [[apps]] entry with the title of the application you want to add the mapping to (e.g. title = 'RetroArch') and edit the mounts array to add your volume (it's in the [apps.runner] section).

Here is how I mounted my downloads folder from the server (/mnt/pool/download/) to the container at /download.

mounts = [ '/mnt/pool/download/:/download:rw' ]

Now I can easily download mods or other things on the server and have the downloads show up in the containers.

Don't forget to restart!
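To review which app has which mounts without scrolling through the whole file, a quick grep does the job. This is a rough sketch: app_mounts is a hypothetical helper name, and it simply assumes that title and mounts each sit on their own line, as in the example above. It does not parse TOML properly, so a multi-line mounts array would only show its first line.

```shell
# Hypothetical helper: print every `title = ...` and `mounts = ...` line
# (with line numbers) from the given wolf config file.
# Assumes single-line entries; this is a text filter, not a TOML parser.
app_mounts() {
  grep -nE '^[[:space:]]*(title|mounts)[[:space:]]*=' "$1"
}

# Usage (path taken from this post):
#   app_mounts /var/my/wolf/cfg/config.toml
```

Each mounts line belongs to the nearest title line above it, and the line numbers make it easy to jump to the right spot in your editor.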

Conclusion

I've been using this setup for a few weeks now and have found it to be reliable and performant.

I'm using it on my laptop and on my NVIDIA Shield, which is connected to the TV. I can pass through gamepads and keyboard/mouse.

For me, performance- and usability-wise it's on par with Parsec, which has been my go-to service for streaming in the past, but without any external services or accounts. It's all self-hosted.

Since I'm the only one in my household that games at this moment, I sadly can't comment on how well the multi-user aspect works. I'll let you know when my kids start gaming. Hopefully the GPU prices will have come down by then and we'll be able to pool our money and replace my aging GeForce 1070.

I hope this post has been valuable and let me know if you run into any issues (email is on my homepage)!